Lessons From TestCon 4

My personal experience at TestCon 4, including key insights on AI in testing, test efficiency metrics, recommended resources, and honest critiques.

On the 17th and 18th April 2025, I attended TestCon 4, the 4th edition of the annual testing conference in Mauritius. It was organized by the Mauritian Software Testing Qualifications Board (MSTQB) and featured speakers from both local and international entities, including the International Software Testing Qualifications Board (ISTQB).

It was my first time attending a testing conference - or any tech conference, for that matter. I had no professional experience with software testing and my knowledge was limited to writing unit tests for pet projects. Despite this, I went there with an open mind and curiosity about what the event could offer.

Glossary from the Conference

During the two days spent at TestCon 4, I came across a lot of new terms, as well as some I had simply forgotten about:

- Equivalence partitioning: Input data is divided into groups based on the expectation that the software will behave similarly for all inputs within a group (Wikipedia contributors, 2024).

- Shift-left testing: Approach in software development that emphasizes moving testing activities earlier in the development process (IBM, n.d).

- Fuzz testing: A technique that “pings” code with random inputs in an effort to crash it and thus identify faults that would otherwise not be apparent (Gitlab, n.d).

- INVEST: It stands for Independent, Negotiable, Valuable, Estimable, Small, and Testable and is used to assess the quality of user stories.

- BDD: Behavior-driven development.

- Gherkin syntax: It is used for BDD and has 3 components: Given, When, Then.

- TMMi: Test Maturity Model integration.

- Example mapping: A method for determining the acceptance criteria of a user story before development starts.

- RaaS: Results as a Service, an evolution of SaaS (Software as a Service).

- RAG: Retrieval Augmented Generation.

- WCAG: Web Content Accessibility Guidelines.

- LCM: Large Concept Models.

- Copyleft: A licensing approach that relies on copyright law to mandate that derivative works be distributed under the same license.

- Three Amigos in Agile: A Three Amigos meeting includes a business analyst, quality specialist and developer to establish clarity on the scope of a project (Zhezherau, 2024).

- Cockroach theory: The cockroach theory states that when a company reveals bad news, many more related, negative events may be revealed in the future (Chen, 2022).

- Paperclip paradox: An AI with the task of creating paperclips might become so good at producing paperclips that it redirects an increasing amount of resources toward paperclip production, at the expense of humanity (Gans, 2018).
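To make two of these terms more concrete, here is a minimal Python sketch of my own (it was not shown at the conference) that applies equivalence partitioning and a very basic form of fuzz testing to the same function. The `classify_age` function, its age bands, and the input range used for fuzzing are all hypothetical and exist only for illustration.

```python
import random


def classify_age(age: int) -> str:
    """Hypothetical function under test: maps an age to a ticket category."""
    if age < 0 or age > 120:
        raise ValueError("age out of range")
    if age < 18:
        return "child"
    if age < 65:
        return "adult"
    return "senior"


# Equivalence partitioning: test one representative input per partition,
# on the expectation that every other input in that partition behaves the same.
partitions = {"child": 10, "adult": 40, "senior": 80}
for expected, representative in partitions.items():
    assert classify_age(representative) == expected


def fuzz(runs: int = 1000, seed: int = 0) -> int:
    """Fuzz testing: feed random inputs and count the documented rejections.

    Any exception other than ValueError, or any unexpected return value,
    would surface here as a crash or a failed assertion.
    """
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    rejected = 0
    for _ in range(runs):
        age = rng.randint(-1000, 1000)
        try:
            assert classify_age(age) in {"child", "adult", "senior"}
        except ValueError:
            rejected += 1
    return rejected


print(f"{fuzz()} of 1000 random inputs were rejected with ValueError")
```

Real fuzzers are far more sophisticated than a random-number loop, but the idea is the same: generate inputs nobody thought to write by hand and watch for unexpected failures.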

Artificial Intelligence

The overarching theme of the conference was Artificial Intelligence (AI). Nearly all the talks were related to AI in one way or another.

Test Case Generation

Speakers from companies like MCB and Accenture explained that they use AI to generate test cases and test data. For example, MCB showcased their model capable of producing BDD tests. The advantage brought by AI is that it can generate a wider variety of test cases (positive, negative, and edge cases) in less time. Emphasis was placed on the edge cases since these are the test cases often overlooked by human testers. However, human testers are still needed to verify the test cases generated.

Moreover, for security reasons, companies are training and hosting their own AI models. No details were provided regarding the costs of the model.

Testing AI Agents

One interesting talk came from Rubric, a quality consulting company, who shared how they tested the output of an AI agent. Since AI is non-deterministic and can hallucinate, we need a way to evaluate its output.

Rubric implemented an AI chatbot in a banking application and used the Ragas library (Exploding Gradients, n.d) to evaluate their AI. The library provides a lot of metrics for assessing the performance of LLMs. However, this relies on a ground truth which can be hard-coded or come from another trusted AI.
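To illustrate what "evaluating against a ground truth" means in practice, here is a minimal sketch in plain Python. It deliberately does not use the Ragas API and is not what Rubric presented: it swaps in a simple token-overlap F1 score as a stand-in metric. The question, the reference answer, and the `fake_chatbot` function are hypothetical placeholders.

```python
from collections import Counter


def token_f1(answer: str, ground_truth: str) -> float:
    """Token-overlap F1 between a model answer and a reference answer."""
    answer_tokens = answer.lower().split()
    truth_tokens = ground_truth.lower().split()
    if not answer_tokens or not truth_tokens:
        return 0.0
    # Multiset intersection counts each shared token at most as often
    # as it appears in both texts.
    overlap = sum((Counter(answer_tokens) & Counter(truth_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(answer_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical evaluation set: (question, hard-coded ground truth).
eval_set = [
    ("What is the daily transfer limit?",
     "the daily transfer limit is 50000 rupees"),
]


def fake_chatbot(question: str) -> str:
    """Stand-in for the real agent; always returns a canned reply."""
    return "the daily transfer limit is 50000 rupees per account"


for question, truth in eval_set:
    score = token_f1(fake_chatbot(question), truth)
    print(f"{question!r}: F1 = {score:.2f}")
```

Whatever metric is used, the score is only as trustworthy as the reference answer it is compared against.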

Honestly, I did not see the value in testing an AI agent with the Ragas library. It felt like a chicken-and-egg problem: to evaluate the agent, you need a ground truth—but that “truth” is often generated by another AI. So how confident can we really be that this verifying agent is not just hallucinating too?

AI-Driven Learning

Werner Henschelchen at TestCon 4 (MSTQB, 2025)

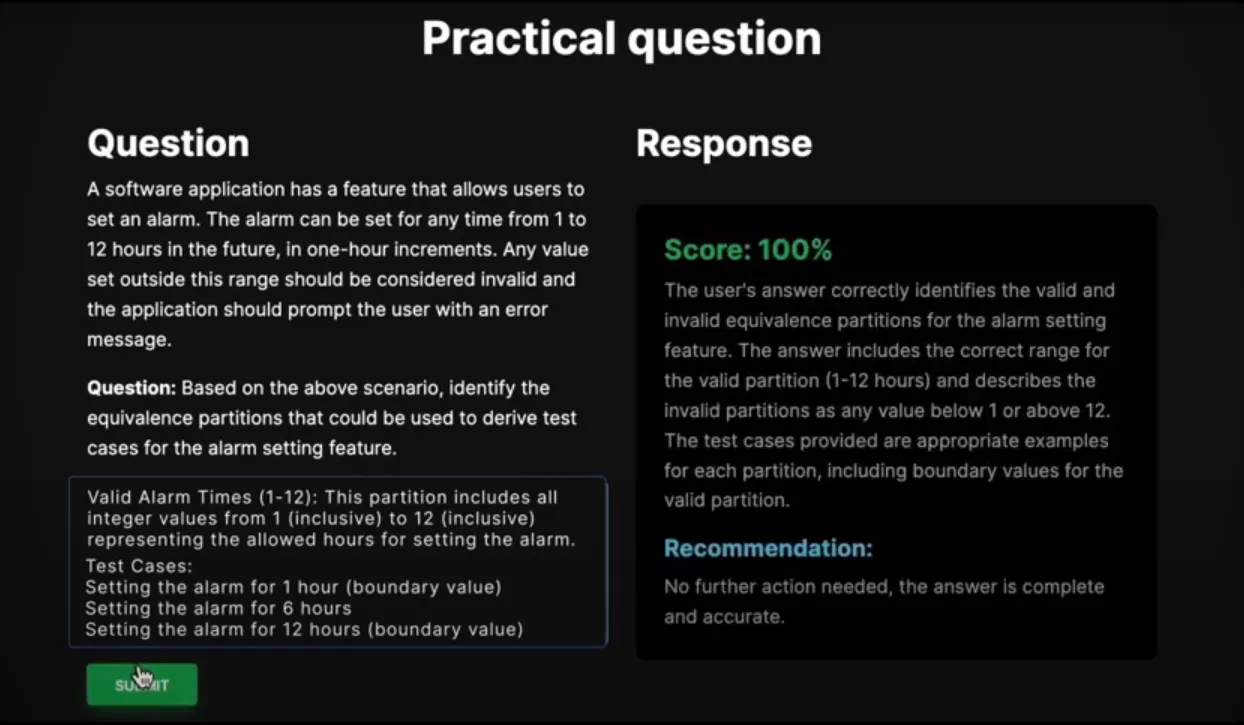

Werner Henschelchen, CEO of the ISTQB exam provider GASQ, gave a talk titled “AI-Driven Learning” where he showcased an AI tool designed to generate practice test questions. In an attempt to address the limited pool of practice questions available for the ISTQB certifications, Werner trained an AI model on the syllabus, past paper questions, and their answers. No details were provided on whether this was a fine-tuned model or one trained from scratch.

In addition, I was able to use the AI tool in question at an A4Q booth during lunch break. After selecting a topic to practice, the web app gave me a question and a text box for an answer. Then after clicking on a “submit” button, the AI corrected my answer, gave me a score out of 100, and provided recommendations with a sample answer. After using the tool for 10 minutes, I thought that the AI was decent. It was also possible to modify my current answer based on the feedback and resubmit it for a better score. I even attempted a prompt injection attack for testing purposes but failed.

Web interface of the AI learning tool (Alliance 4 Qualification, 2024)

While I do concede that AI can help in learning, as a complete beginner in software testing and based on my previous experience with LLMs, I do not think that this tool is enough on its own. The seemingly correct recommendations provided by the tool were not verified by a professional prior to being shown to me and I had no guarantee that the AI was not hallucinating while I was using it despite the reassurance from the booth operator.

The second day of the conference highlighted the limitations of artificial intelligence when attendees were asked a question to win a goodie bag: “What are the four key areas of competence that define a person’s skillset?”. Initially, several people simply fed the question to Google or an AI on their phones and tried to answer. However, after 10+ incorrect answers, the host was forced to reveal part of the answer, which was “Personal competence”. Finally, one participant managed to get the right answer from the ISTQB’s syllabus: “Professional competence, Methodological competence, Social competence, and Personal competence” (ISTQB, 2024, p. 63).

Can AI Replace Testers

Joel Oliveira at TestCon 4 (MSTQB, 2025)

The talk titled “Yes, AI will replace you” by Joel Oliveira, founder of the Portuguese Software Testing and Qualifications Board and member of the ISTQB, was one of the most eye-opening talks of the conference. Despite the provocative and pessimistic title, Joel presented a plausible scenario where AI could displace software testers.

His first point was that the AI market is booming and AI is bound to get better with time, especially at software testing. For example, in a team of three testers, if two begin leveraging AI to automate significant parts of their workflow, the overall productivity of the team could increase to the point where the third tester is no longer needed. Therefore, fewer humans will be needed for software testing in the future.

He also argued that software testing outsourcing will be negatively impacted. Currently, many software testing jobs are outsourced to developing countries where wages are lower. This cost advantage has made offshore testers competitive in the global market. However, as AI tools become more widespread and accessible, the relative advantage of low-wage labor will disappear.

Unfortunately, likely due to time constraints, Joel did not provide any actionable strategies for software testers to make the most of AI. His conclusion, which boils down to “learn to use AI to keep your job”, is not very useful for most attendees since many key questions remain unanswered, such as where to begin, how to juggle work and learning, and what resources to rely on.

Furthermore, I wondered whether a similar argument could be made that AI will reduce the number of software developers. Big strides are already being made in the form of AI coding assistants that boost developer productivity.

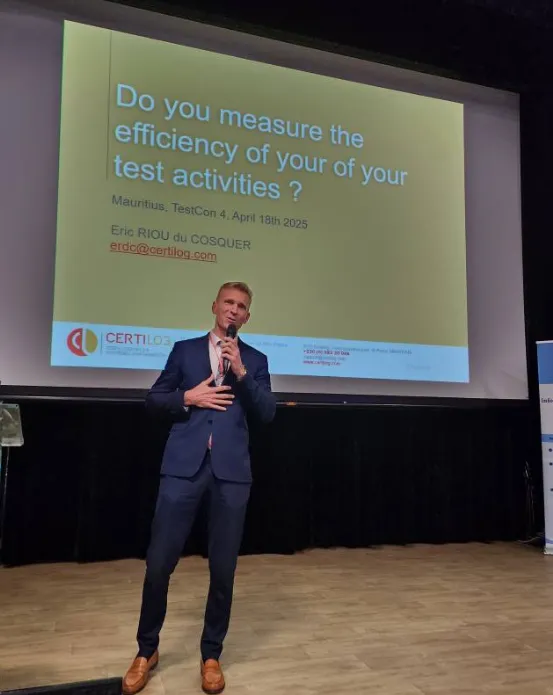

Measuring the Efficiency of Testing Activities

Eric Riou du Cosquer at TestCon 4 (MSTQB, 2025)

Eric Riou du Cosquer, a TMMi Lead Assessor and CEO of Certilog, talked about how to measure the efficiency of your test activities. He recommended several metrics and supported them with his personal experience:

- Number (or percentage) of critical failures in production that could have been avoided by test activities.

- Average criticality of defects detected during the first day/week. The Risk-Impact matrix was recommended to categorize defects.

- Number of defects detected in the most important input.

- Average time elapsed between introduction of defect and its detection.

- Average cost of testing per project with some level of quality.

- Average percentage of false positives and false negatives after running tests.

- Average time spent by a tester in a company before leaving.

I found these metrics quite insightful because I had never heard of most of them before.
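As a rough illustration of how two of these metrics could be computed, here is a small Python sketch of my own. It covers the average time between the introduction of a defect and its detection, and the percentage of false positives and false negatives. The record structures (`Defect`, `RunOutcome`) and the sample data are hypothetical; the speaker did not prescribe any particular data model or formula.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Defect:
    introduced: date  # when the defect entered the code base
    detected: date    # when it was found


@dataclass
class RunOutcome:
    failed: bool       # did the test report a failure?
    real_defect: bool  # was there actually a defect behind it?


def mean_detection_delay_days(defects: list[Defect]) -> float:
    """Average number of days between introduction and detection."""
    return mean((d.detected - d.introduced).days for d in defects)


def false_result_percentages(runs: list[RunOutcome]) -> tuple[float, float]:
    """Return (false positive %, false negative %) over all test runs."""
    total = len(runs)
    false_pos = sum(r.failed and not r.real_defect for r in runs)
    false_neg = sum(not r.failed and r.real_defect for r in runs)
    return 100 * false_pos / total, 100 * false_neg / total


# Hypothetical sample data, for illustration only.
defects = [
    Defect(introduced=date(2025, 3, 1), detected=date(2025, 3, 4)),
    Defect(introduced=date(2025, 3, 10), detected=date(2025, 3, 20)),
]
runs = [
    RunOutcome(failed=True, real_defect=True),    # true positive
    RunOutcome(failed=True, real_defect=False),   # false positive
    RunOutcome(failed=False, real_defect=True),   # false negative
    RunOutcome(failed=False, real_defect=False),  # true negative
]

print("Mean detection delay (days):", mean_detection_delay_days(defects))
print("False positive %, false negative %:", false_result_percentages(runs))
```

In practice, the hard part is likely not the arithmetic but collecting reliable data, such as knowing when a defect was actually introduced.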

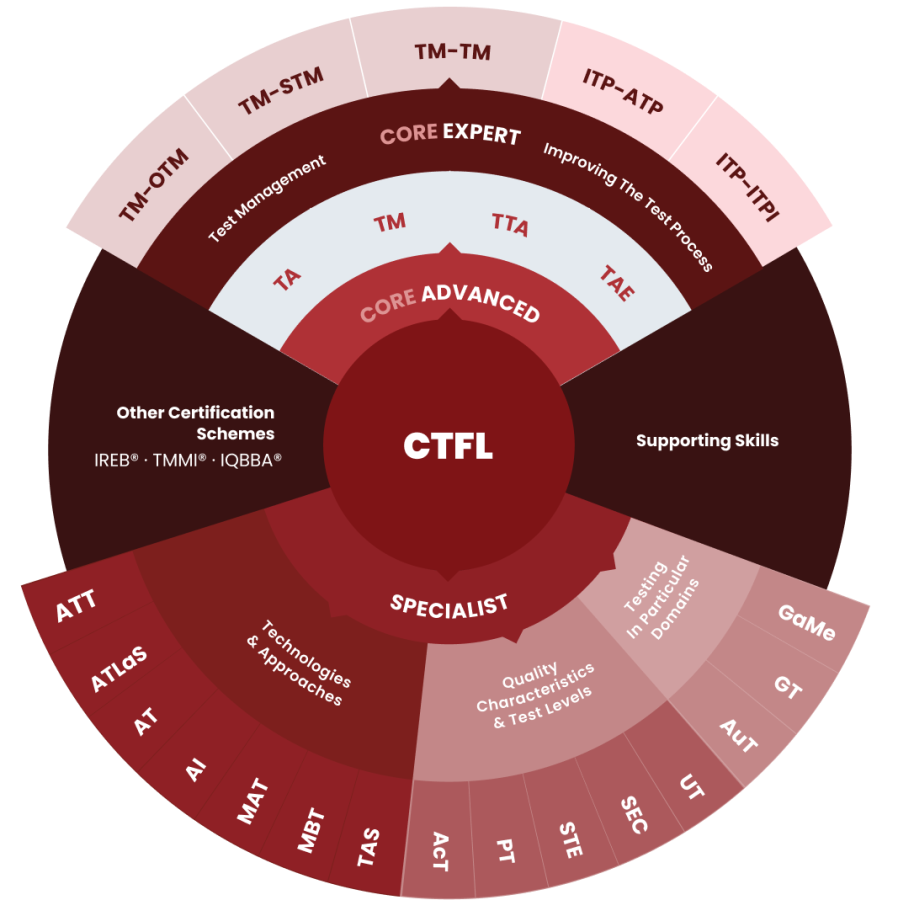

Certifications & Training Providers

The ISTQB offers a wide range of internationally recognized certificates. If you want to get certified, the Certified Tester Foundation Level (CTFL) is your first target. Even though there are a lot of certifications that can demonstrate your testing competencies to an employer, Eric Riou du Cosquer believes that an IT degree is still important.

ISTQB® Product Portfolio (GASQ, 2025)

Here is some information regarding the CTFL certification in the Mauritian context:

- Exams can be taken via remote proctoring or on-site at accredited exam centers.

- A4Q is responsible for authenticating the certifications, while Certilog is a training provider.

- The exam questions are multiple choice and scenario-based. No programming knowledge is required.

- The training costs Rs 28k and the exam itself costs Rs 8k. Certilog was offering a special 30% discount exclusively for TestCon attendees, valid until the end of April 2025.

- It is possible to skip the training phase and go directly to the exam.

- The certificate has no expiration date.

Recommended Resources

During the conference, several speakers shared valuable tools, readings, and resources. Below, I have compiled their recommendations to help you explore new tools.

AI & Performance Tools

- Green Algorithms Calculator (Lannelongue et al., 2021): A tool to help calculate the carbon footprint of your algorithms and help improve sustainability in tech.

- Dora.dev (Google Cloud, 2025): A platform for tracking and optimizing software delivery performance.

- Ragas library (Exploding Gradients, n.d): A library that provides a lot of metrics for assessing the performance of LLMs.

Suggested Readings

- Joel Oliveira recommends reading the Prompting Guide 101 (Google, 2024) to better understand how to craft effective AI prompts.

- Pablo Garcia Munos suggests the paper Software Engineering Metrics: What Do They Measure and How Do We Know? by Kaner and Bond (2004) for a deep dive into metrics in software engineering.

- Olivier Denoo, Vice-President of ISTQB, co-authored a book on software testing automation, published in 2024, along with several other contributors. Some copies of the book were distributed for free at the event.

Accessibility Testing Tools

- Deque – A comprehensive suite for web accessibility testing.

- Wave – A web accessibility evaluation tool that helps identify issues.

- Google Lighthouse – A tool for performing accessibility and performance checks.

- Funkify Chrome Extension – A Chrome extension that simulates several disabilities.

- NVDA Screen Reader – A free and open-source screen reader for Windows, widely used by visually impaired users.

Some Honest Critiques

While the sessions were engaging, there were a few minor aspects about the conference that could have been improved:

- The slide decks were not made available to the public. Having access to them would have made it easier to review the key points.

- Surprisingly, there were very few students and lecturers present, despite the event being held in a university auditorium. This stood in stark contrast to the closing remarks by a university representative, who emphasized that the conference aimed to bridge the gap between academia and industry.

- The food, especially during lunch, did not meet expectations. The tea break snacks were too oily for my taste, and the lunches — dholl puri on the first day and a chicken-and-peas panini on the second — felt underwhelming. A balanced menu might have improved the overall experience.

- I was hoping to see more companies — not just training and certification providers — setting up booths at the entry area, showcasing a wider range of services in the testing space.

Conclusion

My experience at TestCon 4 was positive. Over these two days, I attended all 15 talks and interacted with a lot of people. I learned a lot about the professional world of software testing and I am looking forward to TestCon 5. One highlight was receiving a book on software testing automation, personally autographed by the authors Eric Riou du Cosquer and Olivier Denoo, a nice souvenir from an inspiring event.

References

- ISTQB, 2024. Certified Tester Advanced Level Test Management Syllabus Version 3.0 [online]. Available at: https://isqi.org/media/56/57/20/1718264547/ISTQB_CTAL-TM_Syllabus_v3.0ALL.pdf [Accessed 24 April 2025].

- Alliance 4 Qualification, 2024. A4Q Practical Tester Launch Video [online]. Available at: https://www.youtube.com/watch?v=T_bpLQmCZHU&ab_channel=Alliance4Qualification [Accessed 24 April 2025].

- Wikipedia contributors, 2024. Equivalence partitioning. Wikipedia, The Free Encyclopedia. Available at: https://en.wikipedia.org/w/index.php?title=Equivalence_partitioning&oldid=1253416407 [Accessed 25 April 2025].

- IBM, no date. What is shift-left testing? [online]. Available at: https://www.ibm.com/think/topics/shift-left-testing [Accessed 25 April 2025].

- Gitlab, no date. What is fuzz testing? [online]. Available at: https://about.gitlab.com/topics/devsecops/what-is-fuzz-testing/ [Accessed 25 April 2025].

- Exploding Gradients, no date. ragas [online]. Available at: https://github.com/explodinggradients/ragas [Accessed 25 April 2025].

- MSTQB, 2025. Joel Oliveira delivering a speech at TestCon 4 [online]. Facebook. Available at: https://www.facebook.com/photo/?fbid=1219899526810959 [Accessed 25 April 2025].

- MSTQB, 2025. Eric Riou du Cosquer delivering a speech at TestCon 4 [online]. Facebook. Available at: https://www.facebook.com/photo/?fbid=1219874713480107 [Accessed 25 April 2025].

- MSTQB, 2025. Werner Henschelchen delivering a speech at TestCon 4 [online]. Facebook. Available at: https://www.facebook.com/photo/?fbid=1218999603567618 [Accessed 25 April 2025].

- Kaner, C. and Bond, W.P., 2004. Software engineering metrics: What do they measure and how do we know?. In Proc. Int’l Software Metrics Symposium, Chicago, IL, USA, Sept. 2004 (pp. 1-12). Available at: https://kaner.com/pdfs/metrics2004.pdf [Accessed 25 April 2025].

- Google, 2024. Prompting guide 101 [online]. Available at: https://services.google.com/fh/files/misc/gemini-for-google-workspace-prompting-guide-101.pdf [Accessed 25 April 2025].

- GASQ, 2025. ISTQB® - International Software Testing Qualifications Board [online]. Available at: https://www.gasq.org/en/exam-modules/istqb-r.html [Accessed 25 April 2025].

- Chen, J., 2022. Cockroach Theory: Meaning, Effects, Examples [online]. Available at: https://www.investopedia.com/terms/c/cockroach-theory.asp [Accessed 25 April 2025].

- Gans, J., 2018. AI and the paperclip problem [online]. Available at: https://cepr.org/voxeu/columns/ai-and-paperclip-problem [Accessed 25 April 2025].

- Lannelongue, L., Grealey, J. and Inouye, M., 2021. Green algorithms: quantifying the carbon footprint of computation. Advanced science, 8(12), p.2100707.

- Google Cloud, 2025. Dora [online]. Available at: https://dora.dev/ [Accessed 25 April 2025].

- Zhezherau, A., 2024. What Are the Three Amigos in Agile? [online]. Available at: https://www.wrike.com/agile-guide/faq/what-are-the-three-amigos/ [Accessed 25 April 2025].

- Denoo, O., Hage Chahine, M., Legeard, B. & Riou du Cosquer, E., 2024. Automatisation des activités de test: L’automatisation au service des testeurs [online]. CFTL. Available at: https://cftl.fr/wp-content/uploads/2024/02/Livre-du-CFTL-2-Automatisation-des-activites-de-test.pdf [Accessed 25 April 2025].